model

```python
import torch.nn as nn

class Classifier(nn.Module):
    """MLP that maps 2-D points to logits for 4 classes: 2 -> 16 -> 32 -> 4."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(in_features=2, out_features=16)
        self.fc_act1 = nn.ReLU()
        self.fc2 = nn.Linear(in_features=16, out_features=32)
        self.fc_act2 = nn.ReLU()
        # No softmax on the output: nn.CrossEntropyLoss expects raw logits
        self.fc3 = nn.Linear(in_features=32, out_features=4)

    def forward(self, x):
        x = self.fc1(x)
        x = self.fc_act1(x)
        x = self.fc2(x)
        x = self.fc_act2(x)
        x = self.fc3(x)
        return x
```
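For a plain feed-forward stack like this, the same architecture can also be written with `nn.Sequential`. The sketch below is an alternative formulation, not the post's code (`seq_model` is a hypothetical name). It builds the same 2 → 16 → 32 → 4 network, checks that a batch of 8 points yields one logit per class, and counts the trainable parameters.

```python
import torch
import torch.nn as nn

# Same layer sizes as Classifier: 2 -> 16 -> 32 -> 4, ReLU between hidden layers
seq_model = nn.Sequential(
    nn.Linear(2, 16),
    nn.ReLU(),
    nn.Linear(16, 32),
    nn.ReLU(),
    nn.Linear(32, 4),  # raw logits, one per class
)

x = torch.randn(8, 2)          # a batch of 8 two-dimensional points
logits = seq_model(x)
print(logits.shape)            # torch.Size([8, 4])

# Weights + biases per layer: (2*16 + 16) + (16*32 + 32) + (32*4 + 4) = 48 + 544 + 132
n_params = sum(p.numel() for p in seq_model.parameters())
print(n_params)                # 724
```

A subclass such as `Classifier` is the more flexible choice once `forward` needs branching or extra logic. For a strictly sequential MLP, `nn.Sequential` is the shorter option.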

utils

```python
from sklearn.datasets import make_blobs
import torch
from torch.utils.data import TensorDataset, DataLoader
import matplotlib.pyplot as plt

def get_dataset(N_SAMPLES, BATCH_SIZE, centers):
    # 2-D Gaussian blobs, one cluster per class
    X_, y_ = make_blobs(n_samples=N_SAMPLES, centers=centers, n_features=2,
                        cluster_std=0.5, random_state=0)
    # Features as float32; CrossEntropyLoss expects int64 class indices as targets
    dataset = TensorDataset(torch.FloatTensor(X_), torch.LongTensor(y_))
    data_loader = DataLoader(dataset, batch_size=BATCH_SIZE)
    return data_loader, X_, y_
```
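The sketch below shows what `get_dataset` hands to the model. It calls `make_blobs` with the same arguments as the post (300 samples, 4 centers, `random_state=0`) and inspects the shapes, the label set, and the per-class counts. With a batch size of 8, each epoch has `ceil(300 / 8) = 38` mini-batches, and the last one holds only 4 samples.

```python
import math
import numpy as np
import torch
from sklearn.datasets import make_blobs
from torch.utils.data import TensorDataset, DataLoader

X_, y_ = make_blobs(n_samples=300, centers=4, n_features=2,
                    cluster_std=0.5, random_state=0)
print(X_.shape, y_.shape)        # (300, 2) (300,)
print(np.bincount(y_))           # [75 75 75 75]: samples split evenly over 4 centers

dataset = TensorDataset(torch.FloatTensor(X_), torch.LongTensor(y_))
loader = DataLoader(dataset, batch_size=8)
print(len(loader), math.ceil(300 / 8))   # 38 38

# 300 = 37 * 8 + 4, so the final batch is smaller than the rest
last_X, last_y = list(loader)[-1]
print(last_X.shape)              # torch.Size([4, 2])
```

The smaller final batch is why `train` weights each batch loss by `len(X)` before dividing by `N_SAMPLES`.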

```python
def get_device():
    # Prefer an NVIDIA GPU, then Apple Silicon (MPS), and fall back to the CPU
    if torch.cuda.is_available():
        DEVICE = 'cuda'
    elif torch.backends.mps.is_available():
        DEVICE = 'mps'
    else:
        DEVICE = 'cpu'
    return DEVICE
```

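```python
def train(data_loader, N_SAMPLES, model, loss_function, optimizer, DEVICE):
    model.train()
    epoch_loss, n_corrects = 0., 0
    for X, y in data_loader:
        X, y = X.to(DEVICE), y.to(DEVICE)

        # Forward pass: call the module itself rather than .forward() so hooks run
        pred = model(X)
        loss = loss_function(pred, y)

        # Backward pass and parameter update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # loss is the batch mean, so weight it by the batch size
        epoch_loss += loss.item() * len(X)
        # Predicted class = index of the largest logit
        pred = torch.argmax(pred, dim=1)
        n_corrects += (pred == y).sum().item()

    epoch_loss /= N_SAMPLES
    epoch_acc = n_corrects / N_SAMPLES
    return epoch_loss, epoch_acc
```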
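`train` measures accuracy on the fly, while the weights are still changing within the epoch. A separate evaluation pass after training reports the accuracy of the final weights; it runs with gradients disabled and with `model.eval()` set. The `evaluate` helper below is a hypothetical addition and is not part of the post. The usage example scores an untrained linear model on toy data only to exercise the function.

```python
import torch
import torch.nn as nn
from torch.utils.data import TensorDataset, DataLoader

def evaluate(data_loader, model, loss_function, device):
    """Hypothetical helper: mean loss and accuracy without updating any weights."""
    model.eval()                       # put layers such as dropout into inference mode
    total_loss, n_corrects, n_seen = 0., 0, 0
    with torch.no_grad():              # no autograd graph is recorded
        for X, y in data_loader:
            X, y = X.to(device), y.to(device)
            logits = model(X)
            total_loss += loss_function(logits, y).item() * len(X)
            n_corrects += (logits.argmax(dim=1) == y).sum().item()
            n_seen += len(X)
    return total_loss / n_seen, n_corrects / n_seen

# Usage on toy data: class 1 when the first coordinate is positive
torch.manual_seed(0)
X = torch.randn(64, 2)
y = (X[:, 0] > 0).long()
loader = DataLoader(TensorDataset(X, y), batch_size=8)
toy_model = nn.Linear(2, 2)
loss, acc = evaluate(loader, toy_model, nn.CrossEntropyLoss(), 'cpu')
print(f'loss={loss:.4f}, acc={acc:.4f}')
```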

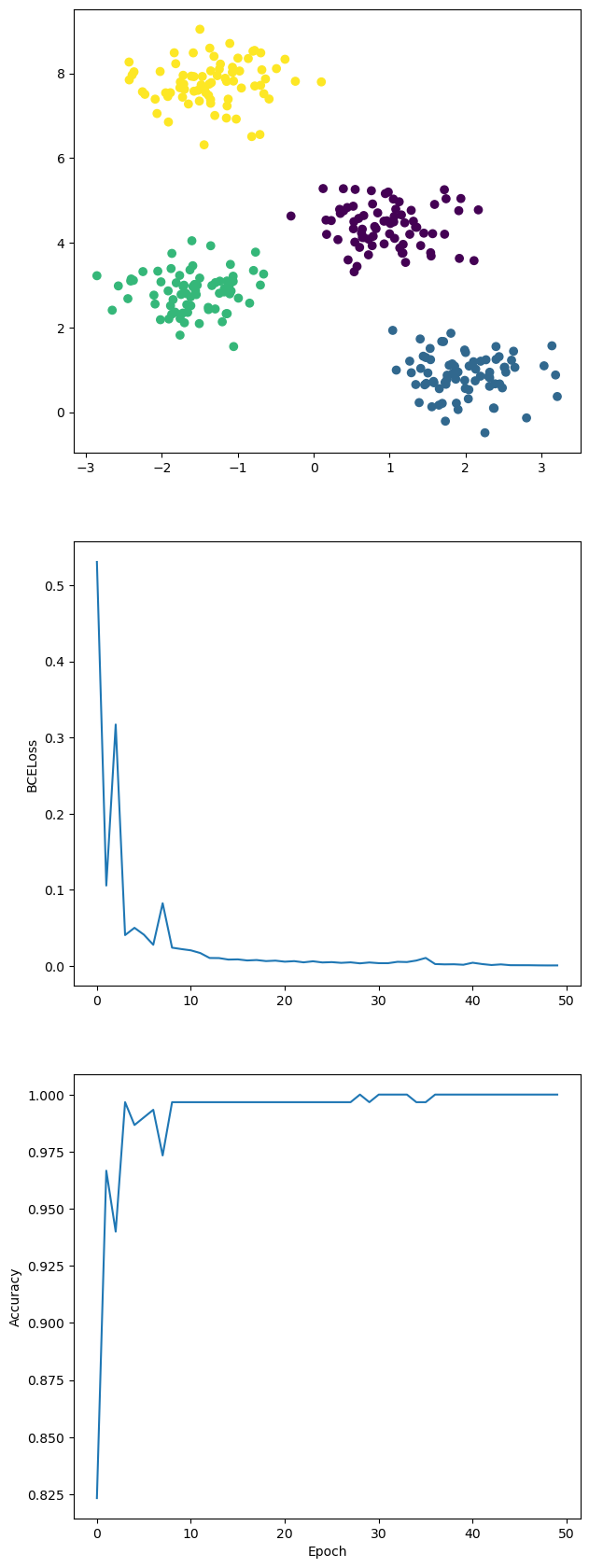

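```python
def vis_losses_accs(losses, accs, X_, y_):
    fig, axes = plt.subplots(3, 1, figsize=(7, 21))
    # Top: the training data, colored by class
    axes[0].scatter(X_[:, 0], X_[:, 1], c=y_)
    # Middle: mean training loss per epoch (the model is trained with CrossEntropyLoss)
    axes[1].plot(losses)
    axes[1].set_ylabel('CrossEntropyLoss')
    # Bottom: training accuracy per epoch
    axes[2].plot(accs)
    axes[2].set_ylabel('Accuracy')
    axes[2].set_xlabel('Epoch')
    fig.tight_layout()
    plt.show()
```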

main

```python
import torch.nn as nn
from torch.optim import SGD

# Assumes the sections above are saved as model.py and utils.py
from model import Classifier
from utils import get_dataset, get_device, train, vis_losses_accs

N_SAMPLES = 300
BATCH_SIZE = 8
EPOCHS = 50
LR = 0.2
centers = 4  # number of blobs = number of classes (matches Classifier's 4 outputs)

data_loader, X_, y_ = get_dataset(N_SAMPLES, BATCH_SIZE, centers)
DEVICE = get_device()

model = Classifier().to(DEVICE)
loss_function = nn.CrossEntropyLoss()
optimizer = SGD(model.parameters(), lr=LR)

losses, accs = [], []
for epoch in range(EPOCHS):
    epoch_loss, epoch_acc = train(data_loader, N_SAMPLES, model, loss_function, optimizer, DEVICE)
    losses.append(epoch_loss)
    accs.append(epoch_acc)

vis_losses_accs(losses, accs, X_, y_)
print(f'Loss : {losses[-1]}, Accuracy : {accs[-1]}\n')
```
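`Classifier` ends in a bare `nn.Linear` layer because `nn.CrossEntropyLoss` applies log-softmax internally. For logits `z` and target class `y`, the per-sample loss is `-log(exp(z[y]) / sum_j exp(z[j]))`, and the default reduction averages it over the batch. The sketch below checks this numerically in two ways: against `F.log_softmax` followed by `F.nll_loss`, and against the formula written out by hand. The logits and labels are random values chosen only for illustration. The sketch also shows why `torch.argmax` on the logits is enough for accuracy: softmax preserves the ordering of the logits, so it does not change which class scores highest.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(8, 4)            # batch of 8 samples, 4 classes (as in the post)
y = torch.randint(0, 4, (8,))         # integer class labels

ce = nn.CrossEntropyLoss()(logits, y)
two_step = F.nll_loss(F.log_softmax(logits, dim=1), y)

# Written out: mean over the batch of -log(softmax(logits)[i, y_i])
probs = torch.exp(logits) / torch.exp(logits).sum(dim=1, keepdim=True)
by_hand = -torch.log(probs[torch.arange(8), y]).mean()

print(ce.item(), two_step.item(), by_hand.item())  # equal up to float rounding

# Predicted class from logits vs. from probabilities
same_pred = torch.equal(logits.argmax(dim=1), probs.argmax(dim=1))
print(same_pred)
```

Adding an explicit `nn.Softmax` to the model's output would make the loss apply softmax twice. That still trains, but it flattens the gradients.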

result
